Introduction

Welcome to the nearcore development guide!

The target audience of this guide is developers of nearcore itself. If you are a user of NEAR (either a contract developer, or validator running a node), please refer to the user docs at https://docs.near.org.

This guide is built with mdBook from sources in the nearcore repository. You can edit it by pressing the "edit" icon in the top right corner, we welcome all contributions. The guide is hosted at https://nomicon.io/.

The guide is organized as a collection of loosely coupled chapters -- you don't need to read them in order, feel free to peruse the TOC, and focus on the interesting bits. The chapters are classified into four parts:

- Protocol Specification includes the formal chain, network, and runtime specifications that define the NEAR Protocol blockchain.

- Architecture talks about how the code works. So, for example, if you are interested in how a transaction flows through the system, look there!

- Practices describe, broadly, how we write code. For example, if you want to learn about code style, issue tracking, or debugging performance problems, this is the chapter for you.

- Finally, the Misc part holds various assorted bits and pieces. We are trying to bias ourselves towards writing more docs, so, if you want to document something and it doesn't cleanly map to a category above, just put it in misc!

If you are unsure, start with Architecture Overview and then read Run a Node

Overview

This chapter describes various data structures used by NEAR Protocol.

Data Structures

Access Keys

Access key provides an access for a particular account. Each access key belongs to some account and

is identified by a unique (within the account) public key. Access keys are stored as account_id,public_key in a trie state. Account can have from zero to multiple access keys.

#![allow(unused)] fn main() { pub struct AccessKey { /// The nonce for this access key. /// NOTE: In some cases the access key needs to be recreated. If the new access key reuses the /// same public key, the nonce of the new access key should be equal to the nonce of the old /// access key. It's required to avoid replaying old transactions again. pub nonce: Nonce, /// Defines permissions for this access key. pub permission: AccessKeyPermission, } }

There are 2 types of AccessKeyPermission in NEAR currently: FullAccess and FunctionCall. FullAccess grants permissions to issue any action on the account. This includes DeployContract, Transfer tokens, call functions FunctionCall, Stake and even permission to delete the account DeleteAccountAction. FunctionCall on the other hand, only grants permission to call any or a specific set of methods on one given contract. It has an allowance of $NEAR that can be spent on GAS and transaction fees only. Function call access keys cannot be used to transfer $NEAR.

#![allow(unused)] fn main() { pub enum AccessKeyPermission { FunctionCall(FunctionCallPermission), FullAccess, } }

AccessKeyPermission::FunctionCall

Grants limited permission to make FunctionCall to a specified receiver_id and methods of a particular contract with a limit of allowed balance to spend.

#![allow(unused)] fn main() { pub struct FunctionCallPermission { /// Allowance is a balance limit to use by this access key to pay for function call gas and /// transaction fees. When this access key is used, both account balance and the allowance is /// decreased by the same value. /// `None` means unlimited allowance. /// NOTE: To change or increase the allowance, the old access key needs to be deleted and a new /// access key should be created. pub allowance: Option<Balance>, /// The access key only allows transactions with the given receiver's account id. pub receiver_id: AccountId, /// A list of method names that can be used. The access key only allows transactions with the /// function call of one of the given method names. /// Empty list means any method name can be used. pub method_names: Vec<String>, } }

Account without access keys

If account has no access keys attached it means that it has no owner who can run transactions from its behalf. However, if such accounts has code it can be invoked by other accounts and contracts.

Accounts

Account ID

NEAR Protocol has an account names system. Account ID is similar to a username. Account IDs have to follow the rules.

Account ID Rules

- minimum length is 2

- maximum length is 64

- Account ID consists of Account ID parts separated by

. - Account ID part consists of lowercase alphanumeric symbols separated by either

_or-. - Account ID that is 64 characters long and consists of lowercase hex characters is a specific NEAR-implicit account ID.

- Account ID that is

0xfollowed by 40 lowercase hex characters is a specific ETH-implicit account ID. - Account ID that is

0sfollowed by 40 lowercase hex characters is a specific NEAR-deterministic account ID.

Account names are similar to a domain names.

Top level account (TLA) like near, com, eth can only be created by registrar account (see next section for more details).

Only near can create alice.near. And only alice.near can create app.alice.near and so on.

Note, near can NOT create app.alice.near directly.

Additionally, there is an implicit account creation path.

Regex for a full account ID, without checking for length:

^(([a-z\d]+[\-_])*[a-z\d]+\.)*([a-z\d]+[\-_])*[a-z\d]+$

Top Level Accounts

| Name | Value |

|---|---|

| REGISTRAR_ACCOUNT_ID | registrar |

Top level account names (TLAs) are very valuable as they provide root of trust and discoverability for companies, applications and users. To allow for fair access to them, the top level account names are going to be auctioned off.

Specifically, only REGISTRAR_ACCOUNT_ID account can create new top level accounts (other than implicit accounts). REGISTRAR_ACCOUNT_ID implements standard Account Naming (link TODO) interface to allow create new accounts.

Note: we are not going to deploy registrar auction at launch, instead allow to deploy it by Foundation after initial launch. The link to details of the auction will be added here in the next spec release post MainNet.

Examples

Valid accounts:

ok

bowen

ek-2

ek.near

com

google.com

bowen.google.com

near

illia.cheap-accounts.near

max_99.near

100

near2019

over.9000

a.bro

// Valid, but can't be created, because "a" is too short

bro.a

Invalid accounts:

not ok // Whitespace characters are not allowed

a // Too short

100- // Suffix separator

bo__wen // Two separators in a row

_illia // Prefix separator

.near // Prefix dot separator

near. // Suffix dot separator

a..near // Two dot separators in a row

$$$ // Non alphanumeric characters are not allowed

WAT // Non lowercase characters are not allowed

me@google.com // @ is not allowed (it was allowed in the past)

system // cannot use the system account, see the section on System account below

// TOO LONG:

abcdefghijklmnopqrstuvwxyz.abcdefghijklmnopqrstuvwxyz.abcdefghijklmnopqrstuvwxyz

System account

system is a special account that is only used to identify refund receipts. For refund receipts, we set the predecessor_id to be system to indicate that it is a refund receipt. Users cannot create or access the system account. In fact, this account does not exist as part of the state.

Implicit accounts

Implicit accounts work similarly to Bitcoin/Ethereum accounts. You can reserve an account ID before it's created by generating a corresponding (public, private) key pair locally. The public key maps to the account ID. The corresponding secret key allows you to use the account once it's created on chain.

NEAR-implicit account ID

The account ID is a lowercase hex representation of the public key. An ED25519 public key is 32 bytes long and maps to a 64-character account ID.

Example: a public key in base58 BGCCDDHfysuuVnaNVtEhhqeT4k9Muyem3Kpgq2U1m9HX will map to the account ID 98793cd91a3f870fb126f66285808c7e094afcfc4eda8a970f6648cdf0dbd6de.

ETH-implicit account ID

The account ID is derived from a Secp256K1 public key using the following formula: '0x' + keccak256(public_key)[12:32].hex().

Example: a public key in base58 2KFsZcvNUMBfmTp5DTMmguyeQyontXZ2CirPsb21GgPG3KMhwrkRuNiFCdMyRU3R4KbopMpSMXTFQfLoMkrg4HsT will map to the account ID 0x87b435f1fcb4519306f9b755e274107cc78ac4e3.

Implicit account creation

An account with implicit account ID can only be created by sending a transaction/receipt with a single Transfer action to the implicit account ID receiver:

- The account will be created with the account ID.

- The account balance will have a transfer balance deposited to it.

- If this is NEAR-implicit account, it will have a new full access key with the ED25519-curve public key of

decode_hex(account_id)and nonce(block_height - 1) * MULTIPLIER(to address an issues discussed here). - If this is ETH-implicit account, it will have the Wallet Contract deployed, which can only be used by the owner of the Secp256K1 private key where

'0x' + keccak256(public_key)[12:32].hex()matches the account ID.

Implicit account can not be created using CreateAccount action to avoid being able to hijack the account without having the corresponding private key.

Once a NEAR-implicit account is created it acts as a regular account until it's deleted.

An ETH-implicit account can only be used by calling the methods of the Wallet Contract. It cannot be deleted, nor can a full access key be added. The primary purpose of ETH-implicit accounts is to enable seamless integration of existing Ethereum tools (such as wallets) with the NEAR blockchain.

Wallet Contract

The Wallet Contract (see NEP-518 for more details) functions as a user account and is designed to receive, validate, and execute Ethereum-compatible transactions on the NEAR blockchain.

Without going into details, an Ethereum-compatible wallet user sends a transaction to an RPC endpoint, which wraps it and passes it to the Wallet Contract (on the target account) as an rlp_execute(target: AccountId, tx_bytes_b64: Vec<u8>) contract call.

Then, the contract parses tx_bytes_b64 and verifies it is signed with the private key matching the target ETH-implicit account ID on which the contract is hosted.

Under the hood, the transaction encodes a NEAR-native action. Currently supported actions are:

- Transfer (from ETH-implicit account).

- Function call (call another contract).

- Add

AccessKeywithFunctionCallPermission. This allows adding a relayer's public key to an ETH-implicit account, enabling the relayer to pay the gas fee for transactions from this account. Still, each transaction has to be signed by the owner of the account (corresponding Secp256K1 private key). - Delete

AccessKey.

Deterministic accounts

Deterministic accounts are an advanced kind of implicit account. A normal implicit account has a fixed access key that is implicitly associated with it. Deterministic accounts have a fixed code and fixed initial state associated with it.

State-initializing data of deterministic accounts

The initial state of a deterministic account is fully defined by an instance of DeterministicAccountStateInit.

Key-value pairs in data must be at most max_length_storage_key and max_length_storage_value bytes long,

respectively. These parameters are currently both set to 4Mib (4194304 bytes).

#![allow(unused)] fn main() { pub enum DeterministicAccountStateInit { V1(DeterministicAccountStateInitV1), } pub struct DeterministicAccountStateInitV1 { pub code: GlobalContractIdentifier, pub data: BTreeMap<Vec<u8>, Vec<u8>>, } pub enum GlobalContractIdentifier { CodeHash(CryptoHash) = 0, AccountId(AccountId) = 1, } }

Deterministic account ID

The account ID is derived from a DeterministicAccountStateInit instance.

To derive the deterministic account id, borsh-encode the DeterministicAccountStateInit enum instance into raw

bytes. Then use the following formula: '0s' + keccak256(bytes)[12:32].hex().

Deterministic account creation

Deterministic accounts cannot be created with a CreateAccount nor with a Transfer action.

A special action, DeterministicStateInitAction, is required.

This action validates that the account id matches its initial state.

As with all account types, you have to attach a large enough deposit to cover for the account storage. If the code and

initial state is small enough to fit below the zero-balance limit, no balance is required. The

DeterministicStateInitAction has a field to include a balance specifically to cover storage. Anything above the

required amount to cover storage requirements is refunded.

A Transfer can also deposit a balance before the DeterministicStateInitAction. The account will not be usable,

however, until the DeterministicStateInitAction is also executed.

After successful initialization, the account will have all key-value pairs in data: BTreeMap<Vec<u8>, Vec<u8>> in its

contract storage. The contract code will be set to that of the global contract defined with

code: GlobalContractIdentifier.

Account

Data for a single account is collocated in one shard. The account data consists of the following:

- Balance

- Locked balance (for staking)

- Code of the contract

- Key-value storage of the contract. Stored in a ordered trie

- Access Keys

- Postponed ActionReceipts

- Received DataReceipts

Balances

Total account balance consists of unlocked balance and locked balance.

Unlocked balance is tokens that the account can use for transaction fees, transfers staking and other operations.

Locked balance is the tokens that are currently in use for staking to be a validator or to become a validator. Locked balance may become unlocked at the beginning of an epoch. See Staking for details.

Contracts

A contract (AKA smart contract) is a program in WebAssembly that belongs to a specific account. When account is created, it doesn't have a contract (except ETH-implicit accounts). A contract has to be explicitly deployed, either by the account owner, or during the account creation. A contract can be executed by anyone who calls a method on your account. A contract has access to the storage on your account.

Storage

Every account has its own storage. It's a persistent key-value trie. Keys are ordered in lexicographical order. The storage can only be modified by the contract on the account. Current implementation on Runtime only allows your account's contract to read from the storage, but this might change in the future and other accounts's contracts will be able to read from your storage.

NOTE: Accounts must maintain a minimum amount of value at a rate of 1 NEAR per 100kb of total storage in order to remain responsive. This includes the storage of the account itself, contract code, contract storage, and all access keys. Any account with less than this minimum amount will not be able to maintain a responsive contract and will, instead, return an error related to this mismatch in storage vs. minimum account balance. See Storage Staking in the docs.

Access Keys

An access key grants an access to a account. Each access key on the account is identified by a unique public key. This public key is used to validate signature of transactions. Each access key contains a unique nonce to differentiate or order transactions signed with this access key.

An access key has a permission associated with it. The permission can be one of two types:

FullAccesspermission. It grants full access to the account.FunctionCallpermission. It grants access to only issued function call transactions.

See Access Keys for more details.

Block and Block Header

The data structures for block and block headers are

#![allow(unused)] fn main() { pub struct Block { /// Block Header pub header: BlockHeader, /// Headers of chunk in the block pub chunks: Vec<ShardChunkHeader>, /// Challenges, but they are not used today pub challenges: Challenges, /// Data to confirm the correctness of randomness beacon output pub vrf_value: [u8; 32], pub vrf_proof: [u8; 64], } }

#![allow(unused)] fn main() { pub struct BlockHeader { pub prev_hash: CryptoHash, /// Inner part of the block header that gets hashed, split into two parts, one that is sent /// to light clients, and the rest pub inner_lite: BlockHeaderInnerLite, pub inner_rest: BlockHeaderInnerRest, /// Signature of the block producer. pub signature: Signature, /// Cached value of hash for this block. pub hash: CryptoHash, } }

where BlockHeaderInnerLite and BlockHeaderInnerRest are

#![allow(unused)] fn main() { pub struct BlockHeaderInnerLite { /// Height of this block. pub height: u64, /// Epoch start hash of this block's epoch. /// Used for retrieving validator information pub epoch_id: EpochId, pub next_epoch_id: EpochId, /// Root hash of the state at the previous block. pub prev_state_root: CryptoHash, /// Root of the outcomes of transactions and receipts. pub outcome_root: CryptoHash, /// Timestamp at which the block was built (number of non-leap-nanoseconds since January 1, 1970 0:00:00 UTC). pub timestamp: u64, /// Hash of the next epoch block producers set pub next_bp_hash: CryptoHash, /// Merkle root of block hashes up to the current block. pub block_merkle_root: CryptoHash, } }

#![allow(unused)] fn main() { pub struct BlockHeaderInnerRest { /// Root hash of the chunk receipts in the given block. pub chunk_receipts_root: CryptoHash, /// Root hash of the chunk headers in the given block. pub chunk_headers_root: CryptoHash, /// Root hash of the chunk transactions in the given block. pub chunk_tx_root: CryptoHash, /// Root hash of the challenges in the given block. pub challenges_root: CryptoHash, /// The output of the randomness beacon pub random_value: CryptoHash, /// Validator proposals. pub validator_proposals: Vec<ValidatorStake>, /// Mask for new chunks included in the block pub chunk_mask: Vec<bool>, /// Gas price. Same for all chunks pub gas_price: u128, /// Total supply of tokens in the system pub total_supply: u128, /// List of challenges result from previous block. pub challenges_result: ChallengesResult, /// Last block that has full BFT finality pub last_final_block: CryptoHash, /// Last block that has doomslug finality pub last_ds_final_block: CryptoHash, /// The ordinal of the Block on the Canonical Chain pub block_ordinal: u64, /// All the approvals included in this block pub approvals: Vec<Option<Signature>>, /// Latest protocol version that this block producer has. pub latest_protocol_version: u32, } }

Here CryptoHash is a 32-byte hash and EpochId is a 32-byte identifier.

Block Hash

The hash of a block is computed by

#![allow(unused)] fn main() { sha256(concat( sha256(concat( sha256(borsh(inner_lite)), sha256(borsh(inner_rest)) )), prev_hash )) }

Chunk and Chunk Header

The data structures for chunk and chunk header are

#![allow(unused)] fn main() { pub struct ShardChunkHeader { pub inner: ShardChunkHeaderInner, pub height_included: BlockHeight, /// Signature of the chunk producer. pub signature: Signature, pub hash: ChunkHash, } }

#![allow(unused)] fn main() { pub struct ShardChunk { pub chunk_hash: ChunkHash, pub header: ShardChunkHeader, pub transactions: Vec<SignedTransaction>, pub receipts: Vec<Receipt>, } }

where ShardChunkHeaderInner is

#![allow(unused)] fn main() { pub struct ShardChunkHeaderInner { /// Previous block hash. pub prev_block_hash: CryptoHash, pub prev_state_root: CryptoHash, /// Root of the outcomes from execution transactions and results. pub outcome_root: CryptoHash, pub encoded_merkle_root: CryptoHash, pub encoded_length: u64, pub height_created: u64, /// Shard index. pub shard_id: u64, /// Gas used in this chunk. pub gas_used: u64, /// Gas limit voted by validators. pub gas_limit: u64, /// Total balance burnt in previous chunk pub balance_burnt: u128, /// Outgoing receipts merkle root. pub outgoing_receipts_root: CryptoHash, /// Tx merkle root. pub tx_root: CryptoHash, /// Validator proposals. pub validator_proposals: Vec<ValidatorStake>, } }

Chunk Hash

Chunk hash is computed by

#![allow(unused)] fn main() { sha256( concat( sha256(borsh(inner)), encoded_merkle_root ) ) }

Data Types

type CryptoHash = [u8; 32]

A sha256 or keccak256 hash.

AccountId = String

Account identifier. Provides access to user's state.

type MerkleHash = CryptoHash

Hash used by a struct implementing the Merkle tree.

type ValidatorId = usize

Validator identifier in current group.

type ValidatorMask = [bool]

Mask which validators participated in multi sign.

type StorageUsage = u64

StorageUsage is used to count the amount of storage used by a contract.

type StorageUsageChange = i64

StorageUsageChange is used to count the storage usage within a single contract call.

type Nonce = u64

Nonce for transactions.

type BlockIndex = u64

Index of the block.

type ShardId = u64

Shard index, from 0 to NUM_SHARDS - 1.

type Balance = u128

Balance is type for storing amounts of tokens.

Gas = u64

Gas is a type for storing amount of gas.

Merkle Proofs

Many components of NEAR Protocol rely on Merkle root and Merkle proofs. For an array of sha256 hashes, we define its merkle root as:

CRYPTOHASH_DEFAULT = [0] * 32

def combine_hash(hash1, hash2):

return sha256(hash1 + hash2)

def merkle_root(hashes):

if len(hashes) == 0:

return CRYPTOHASH_DEFAULT

elif len(hashes) == 1:

return hashes[0]

else:

l = hashes.len();

subtree_len = l.next_power_of_two() // 2;

left_root = merkle_root(hashes[0:subtree_len])

right_root = merkle_root(hashes[subtree_len:l])

return combine_hash(left_root, right_root)

Generally, for an array of borsh-serializable object, its merkle root is defined as

def arr_merkle_root(arr):

return merkle_root(list(map(lambda x: sha256(borsh(x)), arr)))

A Merkle proof is defined by:

#![allow(unused)] fn main() { pub struct MerklePathItem { pub hash: MerkleHash, pub direction: Direction, } pub enum Direction { Left, Right, } pub type MerkleProof = Vec<MerklePathItem>; }

The verification of a hash h against a proclaimed merkle root r with proof p is defined by:

def compute_root(h, p):

res = h

for item in p:

if item.direction is Left:

res = combine_hash(item.hash, res)

else:

res = combine_hash(res, item.hash)

return res

assert compute_root(h, p) == r

Transaction

See Transactions documentation in the Runtime Specification section.

Overview

This chapter covers the NEAR Protocol chain specification.

Chain Specification

- Block Processing

- Consensus

- Light Client

- Selecting Chunk and Block Producers

- Transactions in the Blockchain Layer

- Upgradability

Block Processing

This section covers how blocks are processed once they arrive from the network.

Data Structures

Please refer to this section for details about blocks and chunks.

Validity of Block Header

A block header is invalid if any of the following holds:

timestampis invalid due to one of the following:- It is more than 120s ahead of the local time of the machine.

- It is smaller than the timestamp of the previous block.

- Its signature is not valid, i.e, verifying the signature using the public key of the block producer fails

epoch_idis invalid, i.e, it does not match the epoch id of the block computed locally.next_bp_hashis invalid. This could mean one of the following:epoch_id == prev_block.epoch_id && next_bp_hash != prev_block.next_bp_hashepoch_id != prev_block.epoch_id && next_bp_hash != compute_bp_hash(next_epoch_id, prev_hash)wherecompute_bp_hashcomputes the hash of next epoch's validators.

chunk_maskdoes not match the size of the chunk mask is not the same as the number of shards- Approval or finality information is invalid. See consensus for more details.

Validity of Block

A block is invalid if any of the following holds:

- Any of the chunk headers included in the block has an invalid signature.

- State root computed from chunk headers does not match

state_rootin the header. - Receipts root computed from chunk headers does not match

chunk_receipts_rootin the header. - Chunk headers root computed from chunk headers does not match

chunk_headers_rootin the header. - Transactions root computed from chunk headers does not match

chunk_tx_rootin the header. - For some index

i,chunk_mask[i]does not match whether a new chunk from shardiis included in the block. - Its vrf output is invalid

- Its gas price is invalid, i.e, gas priced computed from previous gas price and gas usage from chunks included in the block according to the formula described in economics does not match the gas price in the header.

- Its

validator_proposalsis not valid, which means that it does not match the concatenation of validator proposals from the chunk headers included in the block.

Process a new block

When a new block B is received from the network, the node first check whether it is ready to be processed:

- Its parent has already been processed.

- Header and the block itself are valid

- All the chunk parts are received. More specifically, this means

- For any shard that the node tracks, if there is a new chunk from that shard included in

B, the node has the entire chunk. - For block producers, they have their chunk parts for shards they do not track to guarantee data availability.

- For any shard that the node tracks, if there is a new chunk from that shard included in

Once all the checks are done, the chunks included in B can be applied.

If the chunks are successfully applied, B is saved and if the height of B is higher than the highest known block (head of the chain),

the head is updated.

Apply a chunk

In order to apply a chunk, we first check that the chunk is valid:

def validate_chunk(chunk):

# get the local result of applying the previous chunk (chunk_extra)

prev_chunk_extra = get_chunk_extra(chunk.prev_block_hash, chunk.shard_id)

# check that the post state root of applying the previous chunk matches the prev state root in the current chunk

assert prev_chunk_extra.state_root == chunk.state_root

# check that the merkle root of execution outcomes match

assert prev_chunk_extra.outcome_root == chunk.outcome_root

# check that validator proposals match

assert prev_chunk_extra.validator_proposals == chunk.validator_proposals

# check that gas usage matches

assert prev_chunk_extra.gas_used == chunk.gas_used

# check that balance burnt matches

assert prev_chunk_extra.balance_burnt == chunk.balance_burnt

# check outgoing receipt root matches

assert prev_chunk_extra.outgoing_receipt_root == chunk.outgoing_receipt_root

After this we apply transactions and receipts:

# get the incoming receipts for this shard

incoming_receipts = get_incoming_receipts(block_hash, shard_id)

# apply transactions and receipts and obtain the result, which includes state changes and execution outcomes

apply_result = apply_transactions(shard_id, state_root, chunk.transactions, incoming_receipts, other_block_info)

# save apply result locally

save_result(apply_result)

Catchup

If a node validates shard X in epoch T and needs to validate shard Y in epoch T+1 due to validator rotation, it has to download the state of that shard before epoch T+1 starts to be able to do so.

To accomplish this, the node will start downloading the state of shard Y at the beginning of epoch T and, after it has successfully downloaded the state, will apply all the chunks for shard Y in epoch T until the current block.

From there the node will apply chunks for both shard X and shard Y for the rest of the epoch.

Consensus

Definitions and notation

For the purpose of maintaining consensus, transactions are grouped into blocks. There is a single preconfigured block called genesis block. Every block except has a link pointing to the previous block , where is the block, and is reachable from every block by following those links (that is, there are no cycles).

The links between blocks give rise to a partial order: for blocks and , means that and is reachable from by following links to previous blocks, and means that or . The relations and are defined as the reflected versions of and , respectively. Finally, means that either , or , and means the opposite.

A chain is a set of blocks reachable from block , which is called its tip. That is, . For any blocks and , there is a chain that both and belong to iff . In this case, and are said to be on the same chain.

Each block has an integer height . It is guaranteed that block heights are monotonic (that is, for any block , ), but they need not be consecutive. Also, may not be zero. Each node keeps track of a valid block with the largest height it knows about, which is called its head.

Blocks are grouped into epochs. In a chain, the set of blocks that belongs to some epoch forms a contiguous range: if blocks and such that belong to the same epoch, then every block such that also belongs to that epoch. Epochs can be identified by sequential indices: belongs to an epoch with index , and for every other block , the index of its epoch is either the same as that of , or one greater.

Each epoch is associated with a set of block producers that are validating blocks in that epoch, as well as an assignment of block heights to block producers that are responsible for producing a block at that height. A block producer responsible for producing a block at height is called block proposer at . This information (the set and the assignment) for an epoch with index is determined by the last block of the epoch with index . For epochs with indices and , this information is preconfigured. Therefore, if two chains share the last block of some epoch, they will have the same set and the same assignment for the next two epochs, but not necessarily for any epoch after that.

The consensus protocol defines a notion of finality. Informally, if a block is final, any future final blocks may only be built on top of . Therefore, transactions in and preceding blocks are never going to be reversed. Finality is not a function of a block itself, rather, a block may be final or not final in some chain it is a member of. Specifically, , where , means that is final in . A block that is final in a chain is final in all of its extensions: specifically, if is true, then is also true for all .

Data structures

The fields in the Block header relevant to the consensus process are:

#![allow(unused)] fn main() { struct BlockHeader { ... prev_hash: BlockHash, height: BlockHeight, epoch_id: EpochId, last_final_block_hash: BlockHash, approvals: Vec<Option<Signature>> ... } }

Block producers in the particular epoch exchange many kinds of messages. The two kinds that are relevant to the consensus are Blocks and Approvals. The approval contains the following fields:

#![allow(unused)] fn main() { enum ApprovalInner { Endorsement(BlockHash), Skip(BlockHeight), } struct Approval { inner: ApprovalInner, target_height: BlockHeight, signature: Signature, account_id: AccountId } }

Where the parameter of the Endorsement is the hash of the approved block, the parameter of the Skip is the height of the approved block, target_height is the specific height at which the approval can be used (an approval with a particular target_height can be only included in the approvals of a block that has height = target_height), account_id is the account of the block producer who created the approval, and signature is their signature on the tuple (inner, target_height).

Approvals Requirements

Every block except the genesis block must logically contain approvals of a form described in the next paragraph from block producers whose cumulative stake exceeds of the total stake in the current epoch, and in specific conditions described in section epoch switches also the approvals of the same form from block producers whose cumulative stake exceeds of the total stake in the next epoch.

The approvals logically included in the block must be an Endorsement with the hash of if and only if , otherwise it must be a Skip with the height of . See this section below for details on why the endorsements must contain the hash of the previous block, and skips must contain the height.

Note that since each approval that is logically stored in the block is the same for each block producer (except for the account_id of the sender and the signature), it is redundant to store the full approvals. Instead physically we only store the signatures of the approvals. The specific way they are stored is the following: we first fetch the ordered set of block producers from the current epoch. If the block is on the epoch boundary and also needs to include approvals from the next epoch (see epoch switches), we add new accounts from the new epoch

def get_accounts_for_block_ordered(h, prev_block):

cur_epoch = get_next_block_epoch(prev_block)

next_epoch = get_next_block_next_epoch(prev_block)

account_ids = get_epoch_block_producers_ordered(cur_epoch)

if next_block_needs_approvals_from_next_epoch(prev_block):

for account_id in get_epoch_block_producers_ordered(next_epoch):

if account_id not in account_ids:

account_ids.append(account_id)

return account_ids

The block then contains a vector of optional signatures of the same or smaller size than the resulting set of account_ids, with each element being None if the approval for such account is absent, or the signature on the approval message if it is present. It's easy to show that the actual approvals that were signed by the block producers can easily be reconstructed from the information available in the block, and thus the signatures can be verified. If the vector of signatures is shorter than the length of account_ids, the remaining signatures are assumed to be None.

Messages

On receipt of the approval message the participant just stores it in the collection of approval messages.

def on_approval(self, approval):

self.approvals.append(approval)

Whenever a participant receives a block, the operations relevant to the consensus include updating the head and initiating a timer to start sending the approvals on the block to the block producers at the consecutive target_heights. The timer delays depend on the height of the last final block, so that information is also persisted.

def on_block(self, block):

header = block.header

if header.height <= self.head_height:

return

last_final_block = store.get_block(header.last_final_block_hash)

self.head_height = header.height

self.head_hash = block.hash()

self.largest_final_height = last_final_block.height

self.timer_height = self.head_height + 1

self.timer_started = time.time()

self.endorsement_pending = True

The timer needs to be checked periodically, and contain the following logic:

def get_delay(n):

min(MAX_DELAY, MIN_DELAY + DELAY_STEP * (n-2))

def process_timer(self):

now = time.time()

skip_delay = get_delay(self.timer_height - self.largest_final_height)

if self.endorsement_pending and now > self.timer_started + ENDORSEMENT_DELAY:

if self.head_height >= self.largest_target_height:

self.largest_target_height = self.head_height + 1

self.send_approval(head_height + 1)

self.endorsement_pending = False

if now > self.timer_started + skip_delay:

assert not self.endorsement_pending

self.largest_target_height = max(self.largest_target_height, self.timer_height + 1)

self.send_approval(self.timer_height + 1)

self.timer_started = now

self.timer_height += 1

def send_approval(self, target_height):

if target_height == self.head_height + 1:

inner = Endorsement(self.head_hash)

else:

inner = Skip(self.head_height)

approval = Approval(inner, target_height)

send(approval, to_whom = get_block_proposer(self.head_hash, target_height))

Where get_block_proposer returns the next block proposer given the previous block and the height of the next block.

It is also necessary that ENDORSEMENT_DELAY < MIN_DELAY. Moreover, while not necessary for correctness, we require that ENDORSEMENT_DELAY * 2 <= MIN_DELAY.

Block Production

We first define a convenience function to fetch approvals that can be included in a block at particular height:

def get_approvals(self, target_height):

return [approval for approval

in self.approvals

if approval.target_height == target_height and

(isinstance(approval.inner, Skip) and approval.prev_height == self.head_height or

isinstance(approval.inner, Endorsement) and approval.prev_hash == self.head_hash)]

A block producer assigned for a particular height produces a block at that height whenever they have get_approvals return approvals from block producers whose stake collectively exceeds 2/3 of the total stake.

Finality condition

A block is final in , where , when either or there is a block such that and . That is, either is the genesis block, or includes at least two blocks on top of , and these three blocks ( and the two following blocks) have consecutive heights.

Epoch switches

There's a parameter that defines the minimum length of an epoch. Suppose that a particular epoch started at height , and say the next epoch will be . Say is a set of block producers in epoch . Say is the highest final block in . The following are the rules of what blocks contain approvals from what block producers, and belong to what epoch.

- Any block with is in the epoch and must have approvals from more than of (stake-weighted).

- Any block with for which is in the epoch and must logically include approvals from both more than of and more than of (both stake-weighted).

- The first block with is in the epoch and must logically include approvals from more than of (stake-weighted).

(see the definition of logically including approvals in approval requirements)

Safety

Note that with the implementation above a honest block producer can never produce two endorsements with the same prev_height (call this condition conflicting endorsements), neither can they produce a skip message s and an endorsement e such that s.prev_height < e.prev_height and s.target_height >= e.target_height (call this condition conflicting skip and endorsement).

Theorem Suppose that there are blocks , , and such that , and . Then, more than of the block producer in some epoch must have signed either conflicting endorsements or conflicting skip and endorsement.

Proof Without loss of generality, we can assume that these blocks are chosen such that their heights are smallest possible. Specifically, we can assume that and . Also, letting be the highest block that is an ancestor of both and , we can assume that there is no block such that and or and .

Lemma There is such an epoch that all blocks such that or include approvals from more than of the block producers in .

Proof There are two cases.

Case 1: Blocks , and are all in the same epoch. Because the set of blocks in a given epoch in a given chain is a contiguous range, all blocks between them (specifically, all blocks such that or ) are also in the same epoch, so all those blocks include approvals from more than of the block producers in that epoch.

Case 2: Blocks , and are not all in the same epoch. Suppose that and are in different epochs. Let be the epoch of and be the preceding epoch ( cannot be in the same epoch as the genesis block). Let and be the first and the last block of in . Then, there must exist a block in epoch such that . Because , we have , and since there are no final blocks such that , we conclude that . Because there are no epochs between and , we conclude that is in epoch . Also, . Thus, any block after and until the end of must include approvals from more than of the block producers in . Applying the same argument to , we can determine that is either in or , and in both cases all blocks such that include approvals from more than of block producers in (the set of block producers in is the same in and because the last block of the epoch preceding , if any, is before and thus is shared by both chains). The case where and are in the same epoch, but and are in different epochs is handled similarly. Thus, the lemma is proven.

Now back to the theorem. Without loss of generality, assume that . On the one hand, if doesn't include a block at height , then the first block at height greater than must include skips from more than of the block producers in which conflict with endorsements in , therefore, more than of the block producers in must have signed conflicting skip and endorsement. Similarly, if doesn't include a block at height , more than of the block producers in signed both an endorsement in and a skip in the first block in at height greater than . On the other hand, if includes both a block at height and a block at height , the latter must include endorsements for the former, which conflict with endorsements for . Therefore, more than of the block producers in must have signed conflicting endorsements. Thus, the theorem is proven.

Liveness

See the proof of liveness in Doomslug Whitepaper and the recent Nightshade sharding protocol.

The consensus in this section differs in that it requires two consecutive blocks with endorsements. The proof in the linked paper trivially extends, by observing that once the delay is sufficiently long for a honest block producer to collect enough endorsements, the next block producer ought to have enough time to collect all the endorsements too.

Approval condition

The approval condition above

Any valid block must logically include approvals from block producers whose cumulative stake exceeds of the total stake in the epoch. For a block and its previous block each approval in must be an

Endorsementwith the hash of if and only ifB.height == B'.height + 1, otherwise it must be aSkipwith the height of .

Is more complex that desired, and it is tempting to unify the two conditions. Unfortunately, they cannot be unified.

It is critical that for endorsements each approval has the prev_hash equal to the hash of the previous block, because otherwise the safety proof above doesn't work, in the second case the endorsements in and can be the very same approvals.

It is critical that for the skip messages we do not require the hashes in the approvals to match the hash of the previous block, because otherwise a malicious actor can create two blocks at the same height, and distribute them such that half of the block producers have one as their head, and the other half has the other. The two halves of the block producers will be sending skip messages with different prev_hash but the same prev_height to the future block producers, and if there's a requirement that the prev_hash in the skip matches exactly the prev_hash of the block, no block producer will be able to create their blocks.

Light Client

The state of the light client is defined by:

BlockHeaderInnerLiteViewfor the current head (which containsheight,epoch_id,next_epoch_id,prev_state_root,outcome_root,timestamp, the hash of the block producers set for the next epochnext_bp_hash, and the merkle root of all the block hashesblock_merkle_root);- The set of block producers for the current and next epochs.

The epoch_id refers to the epoch to which the block that is the current known head belongs, and next_epoch_id is the epoch that will follow.

Light clients operate by periodically fetching instances of LightClientBlockView via particular RPC end-point described below.

Light client doesn't need to receive LightClientBlockView for all the blocks. Having the LightClientBlockView for block B is sufficient to be able to verify any statement about state or outcomes in any block in the ancestry of B (including B itself). In particular, having the LightClientBlockView for the head is sufficient to locally verify any statement about state or outcomes in any block on the canonical chain.

However, to verify the validity of a particular LightClientBlockView, the light client must have verified a LightClientBlockView for at least one block in the preceding epoch, thus to sync to the head the light client will have to fetch and verify a LightClientBlockView per epoch passed.

Validating Light Client Block Views

#![allow(unused)] fn main() { pub enum ApprovalInner { Endorsement(CryptoHash), Skip(BlockHeight) } pub struct ValidatorStakeView { pub account_id: AccountId, pub public_key: PublicKey, pub stake: Balance, } pub struct BlockHeaderInnerLiteView { pub height: BlockHeight, pub epoch_id: CryptoHash, pub next_epoch_id: CryptoHash, pub prev_state_root: CryptoHash, pub outcome_root: CryptoHash, pub timestamp: u64, pub next_bp_hash: CryptoHash, pub block_merkle_root: CryptoHash, } pub struct LightClientBlockLiteView { pub prev_block_hash: CryptoHash, pub inner_rest_hash: CryptoHash, pub inner_lite: BlockHeaderInnerLiteView, } pub struct LightClientBlockView { pub prev_block_hash: CryptoHash, pub next_block_inner_hash: CryptoHash, pub inner_lite: BlockHeaderInnerLiteView, pub inner_rest_hash: CryptoHash, pub next_bps: Option<Vec<ValidatorStakeView>>, pub approvals_after_next: Vec<Option<Signature>>, } }

Recall that the hash of the block is

#![allow(unused)] fn main() { sha256(concat( sha256(concat( sha256(borsh(inner_lite)), sha256(borsh(inner_rest)) )), prev_hash )) }

The fields prev_block_hash, next_block_inner_hash and inner_rest_hash are used to reconstruct the hashes of the current and next block, and the approvals that will be signed, in the following way (where block_view is an instance of LightClientBlockView):

def reconstruct_light_client_block_view_fields(block_view):

current_block_hash = sha256(concat(

sha256(concat(

sha256(borsh(block_view.inner_lite)),

block_view.inner_rest_hash,

)),

block_view.prev_block_hash

))

next_block_hash = sha256(concat(

block_view.next_block_inner_hash,

current_block_hash

))

approval_message = concat(

borsh(ApprovalInner::Endorsement(next_block_hash)),

little_endian(block_view.inner_lite.height + 2)

)

return (current_block_hash, next_block_hash, approval_message)

The light client updates its head with the information from LightClientBlockView iff:

- The height of the block is higher than the height of the current head;

- The epoch of the block is equal to the

epoch_idornext_epoch_idknown for the current head; - If the epoch of the block is equal to the

next_epoch_idof the head, thennext_bpsis notNone; approvals_after_nextcontain valid signatures onapproval_messagefrom the block producers of the corresponding epoch (see next section);- The signatures present in

approvals_after_nextcorrespond to more than 2/3 of the total stake (see next section). - If

next_bpsis not none,sha256(borsh(next_bps))corresponds to thenext_bp_hashininner_lite.

def validate_and_update_head(block_view):

global head

global epoch_block_producers_map

current_block_hash, next_block_hash, approval_message = reconstruct_light_client_block_view_fields(block_view)

# (1)

if block_view.inner_lite.height <= head.inner_lite.height:

return False

# (2)

if block_view.inner_lite.epoch_id not in [head.inner_lite.epoch_id, head.inner_lite.next_epoch_id]:

return False

# (3)

if block_view.inner_lite.epoch_id == head.inner_lite.next_epoch_id and block_view.next_bps is None:

return False

# (4) and (5)

total_stake = 0

approved_stake = 0

epoch_block_producers = epoch_block_producers_map[block_view.inner_lite.epoch_id]

for maybe_signature, block_producer in zip(block_view.approvals_after_next, epoch_block_producers):

total_stake += block_producer.stake

if maybe_signature is None:

continue

approved_stake += block_producer.stake

if not verify_signature(

public_key: block_producer.public_key,

signature: maybe_signature,

message: approval_message

):

return False

threshold = total_stake * 2 // 3

if approved_stake <= threshold:

return False

# (6)

if block_view.next_bps is not None:

if sha256(borsh(block_view.next_bps)) != block_view.inner_lite.next_bp_hash:

return False

epoch_block_producers_map[block_view.inner_lite.next_epoch_id] = block_view.next_bps

head = block_view

Signature verification

To simplify the protocol we require that the next block and the block after next are both in the same epoch as the block that LightClientBlockView corresponds to. It is guaranteed that each epoch has at least one final block for which the next two blocks that build on top of it are in the same epoch.

By construction by the time the LightClientBlockView is being validated, the block producers set for its epoch is known. Specifically, when the first light client block view of the previous epoch was processed, due to (3) above the next_bps was not None, and due to (6) above it was corresponding to the next_bp_hash in the block header.

The sum of all the stakes of next_bps in the previous epoch is total_stake referred to in (5) above.

The signatures in the LightClientBlockView::approvals_after_next are signatures on approval_message. The i-th signature in approvals_after_next, if present, must validate against the i-th public key in next_bps from the previous epoch. approvals_after_next can contain fewer elements than next_bps in the previous epoch.

approvals_after_next can also contain more signatures than the length of next_bps in the previous epoch. This is due to the fact that, as per consensus specification, the last blocks in each epoch contain signatures from both the block producers of the current epoch, and the next epoch. The trailing signatures can be safely ignored by the light client implementation.

Proof Verification

Transaction Outcome Proofs

To verify that a transaction or receipt happens on chain, a light client can request a proof through rpc by providing id, which is of type

#![allow(unused)] fn main() { pub enum TransactionOrReceiptId { Transaction { hash: CryptoHash, sender: AccountId }, Receipt { id: CryptoHash, receiver: AccountId }, } }

and the block hash of light client head. The rpc will return the following struct

#![allow(unused)] fn main() { pub struct RpcLightClientExecutionProofResponse { /// Proof of execution outcome pub outcome_proof: ExecutionOutcomeWithIdView, /// Proof of shard execution outcome root pub outcome_root_proof: MerklePath, /// A light weight representation of block that contains the outcome root pub block_header_lite: LightClientBlockLiteView, /// Proof of the existence of the block in the block merkle tree, /// which consists of blocks up to the light client head pub block_proof: MerklePath, } }

which includes everything that a light client needs to prove the execution outcome of the given transaction or receipt.

Here ExecutionOutcomeWithIdView is

#![allow(unused)] fn main() { pub struct ExecutionOutcomeWithIdView { /// Proof of the execution outcome pub proof: MerklePath, /// Block hash of the block that contains the outcome root pub block_hash: CryptoHash, /// Id of the execution (transaction or receipt) pub id: CryptoHash, /// The actual outcome pub outcome: ExecutionOutcomeView, } }

The proof verification can be broken down into two steps, execution outcome root verification and block merkle root verification.

Execution Outcome Root Verification

If the outcome root of the transaction or receipt is included in block H, then outcome_proof includes the block hash

of H, as well as the merkle proof of the execution outcome in its given shard. The outcome root in H can be

reconstructed by

shard_outcome_root = compute_root(sha256(borsh(execution_outcome)), outcome_proof.proof)

block_outcome_root = compute_root(sha256(borsh(shard_outcome_root)), outcome_root_proof)

This outcome root must match the outcome root in block_header_lite.inner_lite.

Block Merkle Root Verification

Recall that block hash can be computed from LightClientBlockLiteView by

#![allow(unused)] fn main() { sha256(concat( sha256(concat( sha256(borsh(inner_lite)), sha256(borsh(inner_rest)) )), prev_hash )) }

The expected block merkle root can be computed by

block_hash = compute_block_hash(block_header_lite)

block_merkle_root = compute_root(block_hash, block_proof)

which must match the block merkle root in the light client block of the light client head.

RPC end-points

Light Client Block

There's a single end-point that full nodes exposed that light clients can use to fetch new LightClientBlockViews:

http post http://127.0.0.1:3030/ jsonrpc=2.0 method=next_light_client_block params:="[<last known hash>]" id="dontcare"

The RPC returns the LightClientBlock for the block as far into the future from the last known hash as possible for the light client to still accept it. Specifically, it either returns the last final block of the next epoch, or the last final known block. If there's no newer final block than the one the light client knows about, the RPC returns an empty result.

A standalone light client would bootstrap by requesting next blocks until it receives an empty result, and then periodically request the next light client block.

A smart contract-based light client that enables a bridge to NEAR on a different blockchain naturally cannot request blocks itself. Instead external oracles query the next light client block from one of the full nodes, and submit it to the light client smart contract. The smart contract-based light client performs the same checks described above, so the oracle doesn't need to be trusted.

Light Client Proof

The following rpc end-point returns RpcLightClientExecutionProofResponse that a light client needs for verifying execution outcomes.

For transaction execution outcome, the rpc is

http post http://127.0.0.1:3030/ jsonrpc=2.0 method=EXPERIMENTAL_light_client_proof params:="{"type": "transaction", "transaction_hash": <transaction_hash>, "sender_id": <sender_id>, "light_client_head": <light_client_head>}" id="dontcare"

For receipt execution outcome, the rpc is

http post http://127.0.0.1:3030/ jsonrpc=2.0 method=EXPERIMENTAL_light_client_proof params:="{"type": "receipt", "receipt_id": <receipt_id>, "receiver_id": <receiver_id>, "light_client_head": <light_client_head>}" id="dontcare"

Selecting Chunk and Block Producers

Background

Near is intended to be a sharded blockchain. At the time of writing (March 2021), challenges (an important security feature for a sharded network) are not fully implemented. As a stepping stone towards the full sharded solution, Near will go through a phase called "Simple Nightshade". In this protocol, block producers will track all shards (i.e. validate all transactions, eliminating the need for challenges), and there will be an additional type of participant called a "chunk-only producer" which tracks only a single shard. A block includes one chunk for each shard, and it is the chunks which include the transactions that were executed for its associated shard. The purpose of this design is to allow decentralization (running a node which supports a chunk-only producer should be possible for a large number of participants) while maintaining security (since block producers track all shards). For more details on chunks see the subsequent chapter on Transactions. Note: the purpose of the "chunk-only" nomenclature is to reduce confusion since block producers will also produce chunks some times (they track all shards, so they will be able to produce chunks for any shard easily); thus the key distinction is that chunk-only producers only produce chunks, i.e. never produce blocks.

Near is a permission-less blockchain, so anyone (with sufficient stake) can become a chunk-only producer, or a block producer. In this section we outline the algorithm by which chunk-only producers and block producers are selected in each epoch from the proposals of participants in the network. Additionally, we will specify the algorithm for assigning those chunk-only producers and block producers to be the one responsible for producing the chunk/block at each height and for each shard.

There are several desiderata for these algorithms:

- Larger stakes should be preferred (more staked tokens means more security)

- The frequency with which a given participant is selected to produce a particular chunk/block is proportional to that participant's stake

- All participants selected as chunk/block producers should be selected to produce at least one chunk/block during the epoch

- It should be possible to determine which chunk/block producer is supposed to produce the chunk/block at height , for any within the epoch, in constant time

- The block producer chosen at height should have been a chunk producer for some shard at height , this minimizes network communication between chunk producers and block producers

- The number of distinct chunk-only/block producers should be as large as is allowed by the scalability in the consensus algorithm (too large and the system would be too slow, too small and the system would be too centralized)

Note: By "distinct block producers" we mean the number of different signing keys. We recognize it is possible for a single "logical entity" to split their stake into two or more proposals (Sybil attack), however steps to prevent this kind of attack against centralization are out of scope for this document.

Assumptions

- The maximum number of distinct chunk-only producers and block producers supported by the consensus

algorithm is a fixed constant. This will be a parameter of the protocol itself (i.e. all nodes

must agree on the constant). In this document, we will denote the maximum number of chunk-only

producers as

MAX_NUM_CPand the maximum number of block producers byMAX_NUM_BP. - The minimum number of blocks in the epoch is known at the time of block producer selection. This

minimum does not need to be incredibly accurate, but we will assume it is within a factor of 2 of

the actual number of blocks in the epoch. In this document we will refer to this as the "length of

the epoch", denoted by

epoch_length. - To meet the requirement that any chosen validator will be selected to produce at least one

chunk/block in the epoch, we assume it is acceptable for the probability of this not happening

to be sufficiently low. Let

PROBABILITY_NEVER_SELECTEDbe a protocol constant which gives the maximum allowable probability that the chunk-only/block producer with the least stake will never be selected to produce a chunk/block during the epoch. We will additionally assume the chunk/block producer assigned to make each chunk/block is chosen independently, and in proportion to the participant's stake. Therefore, the probability that the block producer with least stake is never chosen is given by the expression , where is the least stake of any block producer and is the total relevant stake (what stake is "relevant" depends on whether the validator is a chunk-only producer or a block producer; more details below). Hence, the algorithm will enforce the condition .

In mainnet and testnet, epoch_length is set to 43200. Let ,

we obtain, .

Algorithm for selecting block and chunk producers

A potential validator cannot specify whether they want to become a block producer or a chunk-only producer. There is only one type of proposal. The same algorithm is used for selecting block producers and chunk producers, but with different thresholds. The threshold for becoming block producers is higher, so if a node is selected as a block producer, it will also be a chunk producer, but not the other way around. Validators who are selected as chunk producers but not block producers are chunk-only producers.

select_validators

Input

max_num_validators: u16max number of validators to be selectedmin_stake_fraction: Ratio<u128>minimum stake ratio for selected validatorvalidator_proposals: Vec<ValidatorStake>(proposed stakes for the next epoch from nodes sending staking transactions)

Output

(validators: Vec<ValidatorStake>, sampler: WeightedIndex)

Steps

sorted_proposals =

sorted_descending(validator_proposals, key=lambda v: (v.stake, v.account_id))

total_stake = 0

validators = []

for v in sorted_proposals[0:max_num_validators]:

total_stake += v.stake

if (v.stake / total_stake) > min_stake_fraction:

validators.append(v)

else:

break

validator_sampler = WeightedIndex([v.stake for v in validators])

return (validators, validator_sampler)

Algorithm for selecting block producers

Input

MAX_NUM_BP: u16Max number of block producers, see Assumptionsmin_stake_fraction: Ratio<u128>, see Assumptionsvalidator_proposals: Vec<ValidatorStake>(proposed stakes for the next epoch from nodes sending staking transactions)

select_validators(MAX_NUM_BP, min_stake_fraction, validator_proposals)

Algorithm for selecting chunk producers

Input

MAX_NUM_CP: u16max number of chunk producers, see Assumptionsmin_stake_fraction: Ratio<u128>, see Assumptionsnum_shards: u64number of shardsvalidator_proposals: Vec<ValidatorStake>(proposed stakes for the next epoch from nodes sending staking transactions)

select_validators(MAX_NUM_CP, min_stake_fraction/num_shards, validator_proposals)

The reasoning for using min_stake_fraction/num_shards as the threshold here is that

we will assign chunk producers to shards later and the algorithm (described below) will try to assign

them in a way that the total stake in each shard is distributed as evenly as possible.

So the total stake in each shard will be roughly be total_stake_all_chunk_producers / num_shards.

Algorithm for assigning chunk producers to shards

Note that block producers are a subset of chunk producers, so this algorithm will also assign block producers to shards. This also means that a block producer may only be assigned to a subset of shards. For the security of the protocol, all block producers must track all shards, even if they are not assigned to produce chunks for all shards. We enforce that in the implementation level, not the protocol level. A validator node will panic if it doesn't track all shards.

Input

chunk_producers: Vec<ValidatorStake>num_shards: usizemin_validators_per_shard: usize

Output

validator_shard_assignments: Vec<Vec<ValidatorStake>>- -th element gives the validators assigned to shard

Steps

- While any shard has fewer than

min_validators_per_shardvalidators assigned to it:- Let

cp_ibe the next element ofchunk_producers(cycle back to the beginning as needed)- Note: if there are more shards than chunk producers, then some chunk producers will be assigned to multiple shards. This is undesirable because we want each chunk-only producer to be a assigned to exactly one shard. However, block producers are also chunk producers, so even if we must wrap around a little, chunk-only producers may still not be assigned multiple shards. Moreover, we assume that in practice there will be many more chunk producers than shards (in particular because block producers are also chunk producers).

- Let

shard_idbe the shard with the fewest number of assigned validators such thatcp_ihas not been assigned toshard_id - Assign

cp_itoshard_id

- Let

- While there are any validators which have not been assigned to any shard:

- Let

cp_ibe the next validator not assigned to any shard - Let

shard_idbe the shard with the least total stake (total stake = sum of stakes of all validators assigned to that shard) - Assign

cp_itoshard_id

- Let

- Return the shard assignments

In addition to the above description, we have a proof-of-concept (PoC) on GitHub. Note: this PoC has not been updated since the change to Simple Nightshade, so it assumes we are assigning block producers to shards. However, the same algorithm works to assign chunk producers to shards; it is only a matter of renaming variables referencing "block producers" to reference "chunk producers" instead.

Algorithm for sampling validators proportional to stake

We sample validators with probability proportional to their stake using the following data structure.

weighted_sampler: WeightedIndex- Allow sampling

- This structure will be based on the WeightedIndex implementation (see a description of Vose's Alias Method for details)

This algorithm is applied using both chunk-only producers and block producers in the subsequent algorithms for selecting a specific block producer and chunk producer at each height.

Input

rng_seed: [u8; 32]- See usages of this algorithm below to see how this seed is generated

validators: Vec<ValidatorStake>sampler: WeightedIndex

Output

selection: ValidatorStake

Steps

# The seed is used as an entropy source for the random numbers.

# The first 8 bytes select a block producer uniformly.

uniform_index = int.from_bytes(rng_seed[0:8], byteorder='little') % len(validators)

# The next 16 bytes uniformly pick some weight between 0 and the total

# weight (i.e. stake) of all block producers.

let uniform_weight = int.from_bytes(rng_seed[8:24], byteorder='little') \

% sampler.weight_sum()

# Return either the uniformly selected block producer, or its "alias"

# depending on the uniformly selected weight.

index = uniform_index \

if uniform_weight < sampler.no_alias_odds[uniform_index] \

else sampler.aliases[uniform_index]

return validators[index]

Algorithm for selecting producer of block at height

Input

h: BlockHeight- Height to compute the block producer for

- Only heights within the epoch corresponding to the given block producers make sense as input

block_producers: Vec<ValidatorStake>(output from above)block_producer_sampler: WeightedIndexepoch_rng_seed: [u8; 32]- Fixed seed for the epoch determined from Verified Random Function (VRF) output of last block in the previous epoch

Output

block_producer: ValidatorStake

Steps

# Concatenates the bytes of the epoch seed with the height,

# then computes the sha256 hash.

block_seed = combine(epoch_rng_seed, h)

# Use the algorithm defined above

return select_validator(rng_seed=block_seed, validators=block_producers, sampler=block_producer_sampler)

Algorithm for selection of chunk producer at height for all shards

Input

- (same inputs as selection of block producer at height h)

num_shards: usizechunk_producer_sampler: Vec<WeightedIndex>(outputs from chunk-only producer selection)validator_shard_assignments: Vec<Vec<ValidatorStake>>

Output

chunk_producers: Vec<ValidatorStake>ith element gives the validator that will produce the chunk for shardi. Note: at least one of these will be a block producer, while others will be chunk-only producers.

Steps

bp = block_producer_at_height(

h + 1,

block_producers,

block_producer_sampler,

epoch_rng_seed,

)

result = []

for shard_id in range(num_shards):

# concatenate bytes and take hash to create unique seed

shard_seed = combine(epoch_rng_seed, h, shard_id)

# Use selection algorithm defined above

cp = select_validator(

rng_seed=shard_seed,

validators=validator_shard_assignments[shard_id],

sampler=chunk_producer_sampler[shard_id]

)

result.append(cp)

# Ensure the block producer for the next block also produces one of the shards.

# `bp` could already be in the result because block producers are also

# chunk producers (see algorithm for selecting chunk producers from proposals).

if bp not in result:

# select a random shard for the block producer to also create the chunk for

rand_shard_seed = combine(epoch_rng_seed, h)

bp_shard_id = int.from_bytes(rand_shard_seed[0:8], byteorder='little') % num_shards

result[bp_shard_id] = bp

return result

Transactions in the Blockchain Layer

A client creates a transaction, computes the transaction hash and signs this hash to get a signed transaction. Now this signed transaction can be sent to a node.

When a node receives a new signed transaction, it validates the transaction (if the node tracks the shard) and gossips about it to all its peers. Eventually, the valid transaction is added to a transaction pool.

Every validating node has its own transaction pool. The transaction pool maintains transactions that were either not yet discarded, or not yet included onto the chain.

Before producing a chunk, transactions are ordered and validated again. This is done to produce chunks with only valid transactions.

Transaction ordering

The transaction pool groups transactions by a pair of (signer_id, signer_public_key).

The signer_id is the account ID of the user who signed the transaction, the signer_public_key is the public key of the account's access key that was used to sign the transactions.

Transactions within a group are not ordered.

The valid order of the transactions in a chunk is the following:

- transactions are ordered in batches.

- within a batch all transactions keys should be different.

- a set of transaction keys in each subsequent batch should be a sub-set of keys from the previous batch.

- transactions with the same key should be ordered in strictly increasing order of their corresponding nonces.

Note:

- the order within a batch is undefined. Each node should use a unique secret seed for that ordering to prevent users from finding the lowest keys, and then using that information to take advantage of every node.

Transaction pool provides a draining structure that allows it to pull transactions in a proper order.

Transaction validation

The transaction validation happens twice, once before adding it to the transaction pool, then before adding it to a chunk.

Before adding to a transaction pool

This is done to quickly filter out transactions that have an invalid signature or are invalid on the latest state.

Before adding to a chunk

A chunk producer has to create a chunk with valid and ordered transactions limited by two criteria:

- the maximum number of transactions for a chunk.

- the total gas burnt for transactions within a chunk.

To order and filter transactions, the chunk producer gets a pool iterator and passes it to the runtime adapter. The runtime adapter pulls transactions one by one. The valid transactions are added to the result; invalid transactions are discarded. Once one of the chunk limits is reached, all the remaining transactions from the iterator are returned back to the pool.

Pool iterator

Pool Iterator is a trait that iterates over transaction groups until all transaction group are empty. Pool Iterator returns a mutable reference to a transaction group that implements a draining iterator. The draining iterator is like a normal iterator, but it removes the returned entity from the group. It pulls transactions from the group in order from the smallest nonce to largest.

The pool iterator and draining iterators for transaction groups allow the runtime adapter to create proper order. For every transaction group, the runtime adapter keeps pulling transactions until the valid transaction is found. If the transaction group becomes empty, then it's skipped.

The runtime adapter may implement the following code to pull all valid transactions:

#![allow(unused)] fn main() { let mut valid_transactions = vec![]; let mut pool_iter = pool.pool_iterator(); while let Some(group_iter) = pool_iter.next() { while let Some(tx) = group_iter.next() { if is_valid(tx) { valid_transactions.push(tx); break; } } } valid_transactions }

Transaction ordering example using pool iterator

Let's say:

- account IDs as uppercase letters (

"A","B","C"...) - public keys are lowercase letters (

"a","b","c"...) - nonces are numbers (

1,2,3...)

A pool might have group of transactions in the hashmap:

transactions: {

("A", "a") -> [1, 3, 2, 1, 2]

("B", "b") -> [13, 14]

("C", "d") -> [7]

("A", "c") -> [5, 2, 3]

}

There are 3 accounts ("A", "B", "C"). Account "A" used 2 public keys ("a", "c"). Other accounts used 1 public key each.

Transactions within each group may have repeated nonces while in the pool.

That's because the pool doesn't filter transactions with the same nonce, only transactions with the same hash.

For this example, let's say that transactions are valid if the nonce is even and strictly greater than the previous nonce for the same key.

Initialization

When .pool_iterator() is called, a new PoolIteratorWrapper is created and it holds the mutable reference to the pool,

so the pool can't be modified outside of this iterator. The wrapper looks like this:

pool: {

transactions: {

("A", "a") -> [1, 3, 2, 1, 2]

("B", "b") -> [13, 14]

("C", "d") -> [7]

("A", "c") -> [5, 2, 3]

}

}

sorted_groups: [],

sorted_groups is a queue of sorted transaction groups that were already sorted and pulled from the pool.

Transaction #1

The first group to be selected is for key ("A", "a"), the pool iterator sorts transactions by nonces and returns the mutable references to the group. Sorted nonces are:

[1, 1, 2, 2, 3]. Runtime adapter pulls 1, then 1, and then 2. Both transactions with nonce 1 are invalid because of odd nonce.

Transaction with nonce 2 is even, and since we don't know of any previous nonces, it is valid, and therefore added to the list of valid transactions.

Since the runtime adapter found a valid transaction, the transaction group is dropped, and the pool iterator wrapper becomes the following:

pool: {

transactions: {

("B", "b") -> [13, 14]

("C", "d") -> [7]

("A", "c") -> [5, 2, 3]

}

}

sorted_groups: [

("A", "a") -> [2, 3]

],

Transaction #2

The next group is for key ("B", "b"), the pool iterator sorts transactions by nonce and returns the mutable references to the group. Sorted nonces are:

[13, 14]. Runtime adapter pulls 13, then 14. The transaction with nonce 13 is invalid because of odd nonce.

Transaction with nonce 14 is added to the list of valid transactions.

The transaction group is dropped, but it's empty, so the pool iterator drops it completely:

pool: {

transactions: {

("C", "d") -> [7]

("A", "c") -> [5, 2, 3]

}

}

sorted_groups: [

("A", "a") -> [2, 3]

],

Transaction #3

The next group is for key ("C", "d"), the pool iterator sorts transactions by nonces and returns the mutable references to the group. Sorted nonces are:

[7]. Runtime adapter pulls 7. The transaction with nonce 7 is invalid because of odd nonce.

No valid transactions is added for this group.

The transaction group is dropped, it's empty, so the pool iterator drops it completely:

pool: {

transactions: {

("A", "c") -> [5, 2, 3]

}

}

sorted_groups: [

("A", "a") -> [2, 3]

],

The next group is for key ("A", "c"), the pool iterator sorts transactions by nonces and returns the mutable references to the group. Sorted nonces are:

[2, 3, 5]. Runtime adapter pulls 2.

It's a valid transaction, so it's added to the list of valid transactions.

Again, the transaction group is dropped, it's empty, so the pool iterator drops it completely:

pool: {

transactions: { }

}

sorted_groups: [

("A", "a") -> [2, 3]

("A", "c") -> [3, 5]

],

Transaction #4

The next group is pulled not from the pool, but from the sorted_groups. The key is ("A", "a").

It's already sorted, so the iterator returns the mutable reference. Nonces are:

[2, 3]. Runtime adapter pulls 2, then pulls 3.

The transaction with nonce 2 is invalid, because we've already pulled a transaction #1 from this group and it had nonce 2.

The new nonce has to be larger than the previous nonce, so this transaction is invalid.

The transaction with nonce 3 is invalid because of odd nonce.

No valid transactions are added for this group.

The transaction group is dropped, it's empty, so the pool iterator drops it completely:

pool: {

transactions: { }

}

sorted_groups: [

("A", "c") -> [3, 5]

],

The next group is for key ("A", "c"), with nonces [3, 5].

Runtime adapter pulls 3, then pulls 5. Both transactions are invalid, because the nonce is odd.

No transactions are added.

The transaction group is dropped, the pool iterator wrapper becomes empty:

pool: {

transactions: { }

}

sorted_groups: [ ],

When runtime adapter tries to pull the next group, the pool iterator returns None, so the runtime adapter drops the iterator.

Dropping iterator

If the iterator was not fully drained, but some transactions still remained, they would be reinserted back into the pool.

Chunk Transactions

Transactions that were pulled from the pool:

// First batch

("A", "a", 1),

("A", "a", 1),

("A", "a", 2),

("B", "b", 13),

("B", "b", 14),

("C", "d", 7),

("A", "c", 2),

// Next batch

("A", "a", 2),

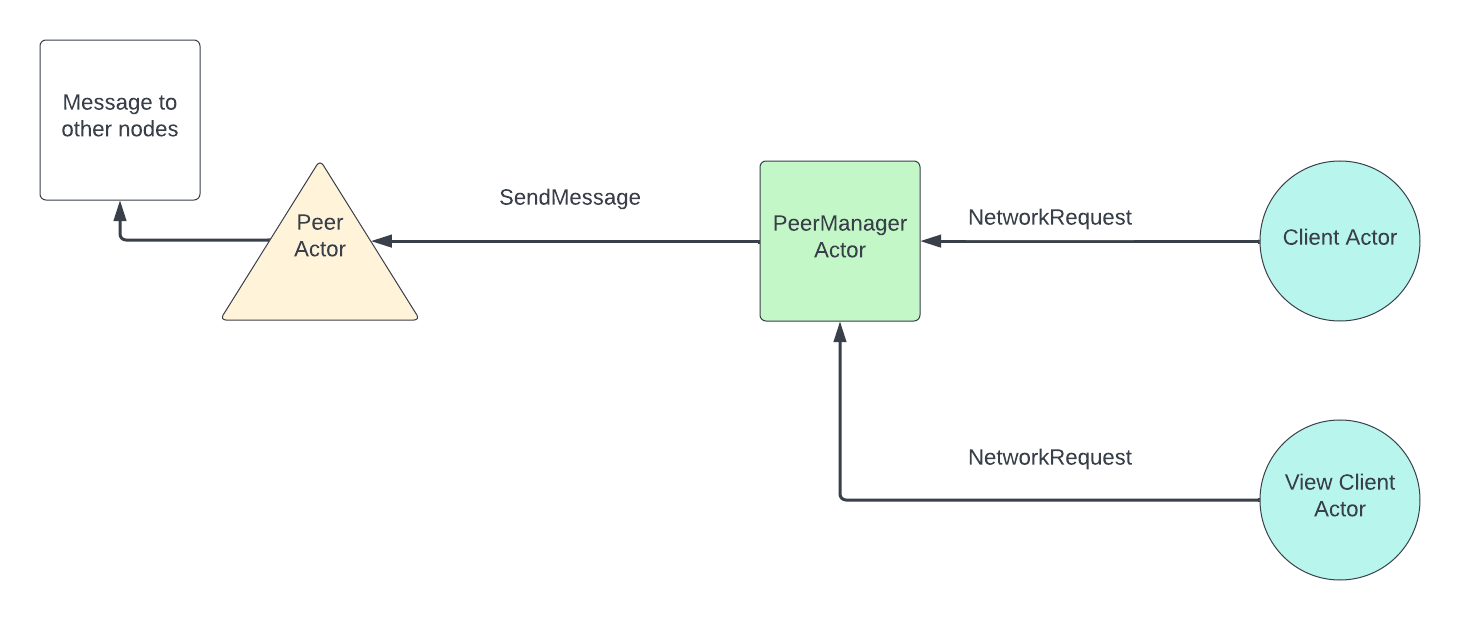

("A", "a", 3),